Large language models are cultural technologies. What might that mean?

Four different perspectives

It’s been five months since Alison Gopnik, Cosma Shalizi, James Evans and I wrote to argue that we should not think of Large Language Models (LLMs) as “intelligent, autonomous agents” paving the way to Artificial General Intelligence (AGI), but as cultural and social technologies. In the interim, these models have certainly improved on various metrics. However, even Sam Altman has started soft-pedaling the AGI talk. I repeat. Even Sam Altman.

So what does it mean to argue that LLMs are cultural (and social) technologies? This perspective pushes Singularity thinking to one side, so that changes to human culture and society are at the center. But that, obviously, is still too broad to be particularly useful. We need more specific ways of thinking - and usefully disagreeing - about the kinds of consequences that LLMs may have.

This post is an initial attempt to describe different ways in which people might usefully think about LLMs as cultural technologies. Some obvious provisos. It identifies four different perspectives; I’m sure there are more that I don’t know of, and there will certainly be more in the future. I’m much more closely associated with one of these perspectives than the others, so discount accordingly for bias. Furthermore, I may make mistakes about what other people think, and I surely exaggerate some of the differences between perspectives. Consider this post less as a definitive description of the state of debate than as a one-man presentation exchange that is supposed to reveal misinterpretations and clear the air so that proper debate can perhaps get going. Finally, I am very deliberately not enquiring into which of these approaches is right. Instead, by laying out their motivating ideas as clearly as I can, I hope to spur a different debate about when each of them is useful, and for which kinds of questions.

Gopnikism

I’m starting with this because for obvious reasons, it’s the one I know best. The original account is this one, by Eunice Yiu, Eliza Kosoy and Alison, which looks to bring together cognitive psychology with evolutionary theory. They suggest that LLMs face sharp limits in their ability to innovate usefully, because they lack direct contact with the real world. Hence, we should treat them not as agentic intelligences, but as “powerful new cultural technologies, analogous to earlier technologies like writing, print, libraries, internet search and even language itself.”

Behind “Gopnikism” lies the mundane observation that LLMs are powerful technologies for manipulating tokenized strings of letters. They swim in the ocean of human-produced text, rather than the world that text draws upon. Much the same is true, pari passu, for LLMs’ cousin-technologies which manipulate images, sound and video. That is why all of them are poorly suited to deal with the “inverse problem” of how to reconstruct “the structure of a novel, changing, external world from the data that we receive from that world.” As Yiu, Kosoy and Gopnik argue, the ways in which modern LLMs ‘learn’ (through massive and expensive training runs) are fundamentally different from how human children learn. What knowledge LLMs can access relies on static statistical compressions of patterns in human-generated cultural information.

Gopnikists are usually deeply skeptical of claims that we can arrive at truly agentic AI by scaling up compute and data. Instead, they suggest that we should understand LLMs in terms of their consequences for human culture. Just as written language, libraries and the like have shaped culture in the past, so too LLMs, their cousins and descendants are shaping culture now. Here, the emphasis is on culture as useful information about the world. Culture is the collective system of knowledge through which human beings gather and transmit information about aspects of their environment (including other humans) that impinge on their goals, survival, and ability to reproduce. Other animals have culture too, but humans are uniquely sophisticated in their ability to transmit and manipulate cultural knowledge, providing them with a variety of advantages. Humans - especially young humans - explore the world and discover useful and interesting information that they can transmit to others. We can consider cultural change as a Darwinian evolutionary process in which some kinds of knowledge propagate successfully (they are copied and reproduced with some degree of accuracy), while others wither away. There is no necessary reason to believe that all the knowledge that survives will be ‘good’ knowledge (errors can certainly persist), but we might surmise that on average it is likely to be somewhat helpful.

All this leads to an emphasis on the limits of LLMs. Since LLMs lack a direct feedback loop between contact with physical reality and updating, they aren’t readily capable of the kind of experimental learning that is the most crucial source of useful innovation. Children do better than LLMs at discovering “novel causal relationships” in the world. Hence, Gopnikism considers LLMs less as discoverers than as mechanisms of cultural transmission, affecting how cultural knowledge is passed from one human being to another. They are potentially useful because they “codify, summarize, and organize that information in ways that enable and facilitate transmission.” Equally, they may distort the information that they transmit, in arbitrary or systematic ways.

Gopnikism’s emphasis on culture as a store of valuable information about causal relationships in the real world, largely generated by actual human beings experimenting, most certainly doesn’t deny that culture may have other aspects. But those aspects are less relevant to the questions it asks.

As Alison and the rest of us suggest in the Science article, LLMs are not just channels of transmission. Cosma and I emphasize that they are “social technologies” as well as “cultural technologies.” Here, we hark back to the work of Herbert Simon and his colleagues on artificial systems for “complex information processing,” such as markets and bureaucracies, which commonly involve creating lossy simplifications of incomprehensibly complex wholes. Human culture, from this perspective, can be considered a vast treasury of “formulas, templates, conventions, and indeed tropes and stereotypes,” and LLMs as powerful machinery for summarizing, accessing, manipulating and combining them. James Evans and his collaborators explore how these technologies make previously inaccessible aspects of culture visible and tangible, enabling new forms of exploration and cultural discovery.

The strong implication is that these technologies will be consequential, and may turn out to be very useful, but in ways that depend on human culture and will continue to so depend. Manipulating cultural templates and conventions at scale can provide a lot of value added. As Simon repeatedly emphasized, individual human beings have very limited problem solving capacities, which is why, in practice, they outsource a lot of the action to bigger social systems. If tropes and stereotypes are doing a lot of our intellectual work for us, we might be able to do a lot more if we can combine them in new ways. Equally, tropes and stereotypes are the congealed cultural product of experimentation with the world, rather than volitional experimenters in their own right. Even when Gopnikism says that LLMs can do more than transmit, it emphasizes the fundamental differences between human (and animal) intelligence that is situated in the world, and cultural information that is situated at least one remove away from it.

Interactionism

Interactionist accounts of LLMs start from a similar (but not identical) take on culture as a store of collective knowledge, but a different understanding of change. Gopnikism builds on ideas about how culture evolves through lossy but relatively faithful processes of transmission. Interactionism instead emphasizes how humans are likely to interpret and interact with the outputs of LLMs, given how they understand the world. Importantly for present purposes, cultural objects are more likely to persist when they somehow click with the various specialized cognitive modules through which human intelligence perceives and interprets its environment, and indeed are likely to be reshaped to bring them more into line with what those modules lead us to expect.

From this perspective, then, the cultural consequences of LLMs will depend on how human beings interpret their outputs, which in turn will be shaped by the ways in which biological brains work. The term “interactionism” stems from this approach’s broader emphasis on human group dynamics, but by a neat coincidence, its most immediate contribution to the cultural technology debate, as best I can see, rests on micro-level interactions between human beings and LLMs.

The idea is that transmission processes alone can’t explain how human culture changes. As Dan Sperber - perhaps the most important figure in this approach - argued in the 1990s, human culture is the product of an apparently unbounded space of human communications. There isn’t any neat process of Darwinian selection, in which ‘memes’ or some other units of culture reproduce or die out, but there are points of attraction within the apparent chaos. Some cultural constructs seem more likely to endure and be reproduced than others, either because they accord with broad features of the society that the culture subsists in, or because they map onto the mental modules through which we make sense of the world. All these constructs are shaped by our minds: stories, for example, may have their more awkward features rubbed away as they pass from person to person so that they converge on our expectations and archetypes.

All that may seem hopelessly abstract: here’s an example (which I’ve talked about before) that shows how it cashes out. Why do so many people, in so many different periods and societies, believe in a god or gods, invisible and powerful forces that shape the world that we live in? In In Gods We Trust, Scott Atran provides a plausible-seeming interactionist account of why belief in gods is so common across different cultures. He argues that such beliefs are:

in part, by-products of a naturally selected cognitive mechanism for detecting agents — such as predators, protectors, and prey — and for dealing rapidly and economically with stimulus situations involving people and animals. This innate releasing mechanism is trip-wired to attribute agency to virtually any action that mimics the stimulus conditions of natural agents: faces on clouds, voices in the wind, shadow figures, the intentions of cars or computers, and so on.

In other words: our brains are so heavily wired to detect conscious agency in the world that we see it in places where it doesn’t actually exist. We posit the existence of gods and other supernatural agents so as to explain the complex workings of phenomena that we otherwise would have difficulty in explaining. Lightning happens when the thunder god is angry. And so on. Of course, the aside about the “intentions … of computers” has become more pungent since he wrote it.

Gods, demons, dryads, wood-woses and the like arise and persist as cultural phenomena, in part because they click with the cognitive modules through which our brains interpret the world. The specific form that they take will be influenced by some combination of chance and of the specific cultural ecology in which they arise. The myths of pre-Christian Ulster are different in crucial respects from those of the modern Bay Area. But there may still be important commonalities.

That, then, helps explain why LLMs challenge, in new ways, our ability to deal with agentic intelligence and the non-agentic world. As Sperber points out, “from birth onwards, human beings expect relevance from the sounds of speech (an expectation often disappointed but hardly ever given up).” This is pre-programmed into us, thanks to a past environment in which, obviously, there was little chance of encountering speech or communication that did not ultimately originate from some putatively conscious agent. That is not the world that is coming into being around us.

All this suggests that as well as focusing on copying and transmission, we want to think about reception. How will human beings interpret the outputs of LLMs, given both the cognitive modules that they use to interpret the world, and the specific cultural environments that they find themselves in? More pungently: how will humans interpret the outputs of a technology that trips our cognitive sensors for agentic intelligence without having any agency behind it?

On the one hand, interactionists like (Hugo) Mercier and Sperber point out that we have cognitive modules that seem adapted to detecting other people’s bullshit, so that we aren’t readily taken in by others’ claims. We are much better at detecting the flaws in other people’s arguments than in our own; according to some experimental evidence, we are even better at detecting the flaws in our own arguments when they are presented as coming from other people. Thus, Felix Simon, Sacha Altay and Hugo Mercier see some reasons for optimism that generative AI will be less successful at producing convincing misinformation than many believe. In more recent work, Simon and Altay point out that we haven’t seen the kinds of AI-provoked democratic disasters that many predicted.

However, two other scholars suggest that LLMs’ so-called ‘hallucinations’ may glide easily past people’s innate skepticism, because they are the model’s best guess (with a little added noise) as to what the most likely response would be. Their wrong guesses may sometimes seem more plausible to humans than the truth, which may be more varied and more unexpected than the unexceptionable pabulum of the machine.

A possible social consequence of this analysis is thus, what we call the modal drift hypothesis: that, because our open vigilance mechanisms are not able to deal well with texts generated by large language models, who have no explicit intention to deceive us and which produce statements which pass our plausibility checking, the inclusion of language models as mass contributors to our information ecosystems could disrupt its quality, such that a discrepancy between the results of our intuitive judgements of the text’s truthfulness, and its actual accuracy will only grow.

And all this is set to get much odder, as humans and LLMs increasingly interact. We are already seeing tragic folies à deux unfold. We are likely to see new points of cultural attraction emerge from the interaction between human intelligence and machinic systems that straddle the organic and inorganic in unprecedented ways. Strange cults and home-baked religions are a very safe bet. So too are weird self-sustaining cognitive economies. Max Weber famously argued in the first decades of the 20th century that:

the ultimate and most sublime values have retreated from public life either into the transcendental realm of mystic life or into the brotherliness of direct and personal human relations. It is not accidental that our greatest art is intimate and not monumental, nor is it accidental that today only within the smallest and intimate circles, in personal human situations, in pianissimo, that something is pulsating that corresponds to the prophetic pneuma, which in former times swept through the great communities like a firebrand, welding them together.

In the first decades of the 21st, the prophetic pneuma is back. What next?

The interactionist perspective broadly encourages us to ask three questions. What is the cultural environment going to look like as LLMs and related technologies become increasingly important producers of culture? How are human beings, with their various cognitive quirks and oddities, likely to interpret and respond to these outputs? And what kinds of feedback loops are we likely to see between the first and the second?

Structuralism

I’ve recently written at length about Leif Weatherby’s book, Language Machines, which argues that classical structuralist theories of language provide a powerful theory of LLMs. This articulates a third approach to LLMs as cultural technologies. In contrast to Gopnikism, it doesn’t assume that culture’s value stems from its connection to the material world, and pushes back against the notion that we ought to build a “ladder of reference” from reality on up. It also rejects interactionists’ emphasis on human cognitive mechanisms:

A theory of meaning for a language that somehow excludes cognition—or at least, what we have often taken for cognition—is required.

Further:

Cognitive approaches miss that the interesting thing about LLMs is their formal-semiotic properties independent of any “intelligence.”

Instead of the mapping between the world and learning, or between the architecture of LLMs and the architecture of human brains, it emphasizes the mappings between large scale systems. The most important is the mapping between the system of language and the statistical systems that can capture it, but it is interested in other systems too, such as bureaucracy.

Language models capture language as a cultural system, not as intelligence. … The new AI is constituted as and conditioned by language, but not as a grammar or a set of rules. Taking in vast swaths of real language in use, these algorithms rely on language in extenso: culture, as a machine.

The idea, then, is that language is a system, the most important properties of which do not depend on its relationship either to the world that it describes or to the intentions of the humans who employ it. Weatherby suggests that the advantage of structuralist and (much) post-structuralist thought is that it allows you to examine LLMs in their own right, rather than in reference to something else, turning what Gopnikism sees as a flaw into an essential aspect of something strange and new.

Saussure cuts the sign in two, arguing that it is, in the case of language, made up of a “sound-image” and a “concept,” which he further restricts with the technical names “signifier” and “signified.” Much has been made of this walling off of language from direct world reference, but, in addition to being far more plausible than any reference-first theory, it fits the problem of a language-generating system that has no way to generate words by direct reference, like an LLM.

LLMs work as they do thanks to a remarkably usable mapping between two systems: the system of human language as it has been used and developed, and the system of statistical summarizations spat out by a transformer architecture, leading to a “merging of different structural orders.” While their cultural outputs are different from human cultural outputs (there is no direct intentionality behind them), they are, most emphatically, a kind of culture. That which we think of as human is itself in part the outcome of systems that are quite as impersonal and devoid of intent as any large language model. There is no original human essence that is untainted by system. In Herbert Simon’s terms, LLMs are systems of the artificial - but so too is most of human society.

The Eliza effect is a symptom of the depth of language in our cognitive apparatus. We think of it as parasitic on some original, but we have no content with which to fill in that original image. Lacking it, we bend ourselves forward and backward conceptually to deny, at any cost, that AI is simply generating culture.

And this in turn is bringing about a great transformation, building on previous such transformations, welding the various systems of society together in quite different ways than before, and obliging us to pay attention to things we have discounted or ignored.

LLMs force us to confront the problem of language not as reference or communication but as the interface between form and meaning, culture and art, ideology and insight. They are the most complete version of computational semiology to the present, and that is precisely because they instantiate language to a good approximation of its digital-cultural form. The datafication of everything stands to become qualitative, to form a linguistic-computational hinge for other forms of data processing and the extensive text-first yet multimedia interface of our daily lives and global social processes.

This broad perspective does not entail specific views on whether these transformations are going to be good or bad for us. Elsewhere, Weatherby worries that LLMs may transform bureaucracies in adverse ways.

We could call it spreadsheet culture in hyperdrive, a world in which all data can be translated into summary language and all language into optimized data with nothing more than a prompt. But where spreadsheets had limited functionality, LLMs act as universal translators in the same arena. They have many flaws, but this core capacity is a step-change in the mundane world of modern bureaucracy. … AI’s power, danger, and limits are all in this banal world of rows and columns. It’s easy to overlook this, because we have spent the last three decades making virtually the whole world into a giant spreadsheet. With everything from your personal daily heartrate variations and financial trends to tics of speech and culture pre-formatted for an AI model, the power of this tool becomes immense.

Equally, Ted Underwood, who starts from a broadly similar understanding of these technologies, is guardedly enthusiastic about their possibilities.

writing allows us to take a step back from language, survey it, fine-tune it, and construct complex structures where one text argues with two others, each of which footnotes fifty others. It would be hard to imagine science without the ability writing provides to survey language from above and use it as building material.

Generative AI represents a second step change in our ability to map and edit culture. Now we can manipulate, not only specific texts and images, but the dispositions, tropes, genres, habits of thought, and patterns of interaction that create them. I don’t think we’ve fully grasped yet what this could mean.

More generally:

As we develop models trained on different genres, languages, or historical periods, these models could start to function as reference points in a larger space of cultural possibility that represents the differences between maps like the one above. It ought to be possible to compare different modes of thought, edit them, and create new adjectives (like style references) to describe directions in cultural space.

If we can map cultural space, could we also discover genuinely new cultural forms and new ways of thinking?… externalizing language, and fixing it in written marks, eventually allowed us to construct new genres (the scientific paper, the novel, the index) that required more sustained attention or more mobility of reference than the spoken word could support. Models of culture should similarly allow us to explore a new space of human possibility by stabilizing points of reference within it.

The structuralist approach then, examines how LLMs produce culture without volitional intent. Structuralists do not draw a sharp distinction between discovery and cultural transmission, because they are less interested in the ways in which culture discloses information about physical reality than in how human and non-human producers of culture become imbricated in the same systems. Similarly, they are less inclined to draw out the individual from the system to focus on their cognitive quirks than to emphasize the collective structures from which meaning emerges.

Role play

Weatherby is frustrated by the dominance of cognitive science in AI discussions. The last perspective on cultural technology that I am going to talk about argues that cognitive science has much more in common with Wittgenstein and Derrida than you might think. Murray Shanahan, Kyle McDonell and Laria Reynolds’ Nature article on the relationship between LLMs and “role play” starts from the profound differences between our assumptions about human intelligence and how LLMs work. Shanahan, in subsequent work, takes this in some quite unexpected directions.

I found this article a thrilling read. Admittedly, it played to my priors. I first came across LLMs in early/mid 2020 thanks to “AI Dungeon,” an early game built on GPT-2, which used the engine to generate an infinitely iterated role-playing game, starting in a standard fantasy or science fiction setting. AI Dungeon didn’t work very well as a game, because it kept losing track of the underlying story. I couldn’t use it to teach my students about AI as I had hoped, because of its persistent tendency to swivel into porn. But it clearly demonstrated the possibility of something important, strange and new.

Shanahan, McDonell and Reynolds explain why LLMs are more like a massive, confusing “Choose Your Own Adventure” than a coherent unilinear intelligence. It’s the best short piece on what LLMs do that I’m aware of. The authors explain why LLM implementations are “superposition[s] of simulacra within a multiverse of possible characters.” The LLM draws upon a summarized version of all the characters, all the situations that those characters may find themselves in, and all the stock cultural shorthands that humans have come up with about such characters in such circumstances, all held in superposition with each other at once.

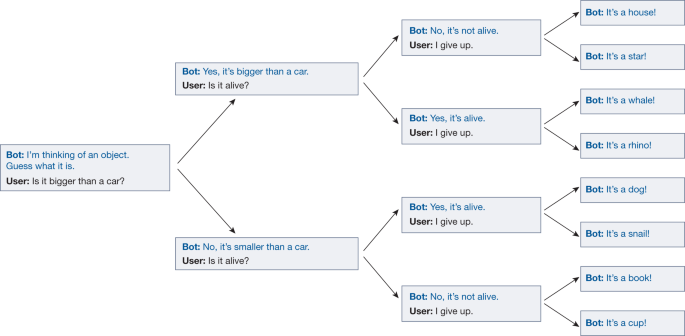

Shanahan, McDonell and Reynolds explain how this works, using the game “20 Questions.” When a human being plays this game, they begin by thinking about an object, and then answer questions about it, which may or may not lead their questioner to guess correctly. LLMs work differently.

In this analogy, the LLM does not ‘decide’ what the object is at the beginning of the question game. Instead, it begins with a very large number of possible objects in superposition. That number will narrow as the questions proceed, so that an ever smaller group of objects fit the criteria, until it fixes on a possible object in the final round, depending on ‘temperature’ and other factors. This illustrates how, in conversation, the LLM has a massive possible set of characters, situations or approaches in superposition that it can draw on, and will likely narrow in on a smaller set of possibilities as dialogue proceeds within the context window. Many of these characters, situations and approaches are cliches, because cliches are, pretty well by definition, going to be heavily over-represented in the LLM’s training set.

the training set provisions the language model with a vast repertoire of archetypes and a rich trove of narrative structure on which to draw as it ‘chooses’ how to continue a conversation, refining the role it is playing as it goes, while staying in character. The love triangle is a familiar trope, so a suitably prompted dialogue agent will begin to role-play the rejected lover. Likewise, a familiar trope in science fiction is the rogue AI system that attacks humans to protect itself. Hence, a suitably prompted dialogue agent will begin to role-play such an AI system.
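The 20 Questions analogy can be made a little more concrete with a toy sketch in Python. It illustrates the analogy only, not how a transformer actually works: a real LLM samples text token by token and never holds an explicit list of candidate objects. Everything here is a hypothetical stand-in: the candidate objects, their weights (very loosely, how common each might be in a training corpus), the yes/no attributes, and the function names `narrow` and `final_guess`. Each answer prunes the pool of candidates held “in superposition,” and the sketch commits to a single object only at the end, by sampling with a temperature parameter.

```python
import math
import random

# Hypothetical candidates with made-up weights, standing in (loosely) for how
# often each object might appear in a training corpus. Illustrative only.
PRIORS = {
    "dog": 5.0, "cat": 4.0, "sparrow": 2.0, "salmon": 1.5,
    "oak tree": 1.0, "car": 3.0, "bicycle": 1.0,
}

# Hypothetical yes/no attributes for each candidate.
ATTRIBUTES = {
    "dog":      {"alive": True,  "flies": False, "has_legs": True},
    "cat":      {"alive": True,  "flies": False, "has_legs": True},
    "sparrow":  {"alive": True,  "flies": True,  "has_legs": True},
    "salmon":   {"alive": True,  "flies": False, "has_legs": False},
    "oak tree": {"alive": True,  "flies": False, "has_legs": False},
    "car":      {"alive": False, "flies": False, "has_legs": False},
    "bicycle":  {"alive": False, "flies": False, "has_legs": False},
}


def narrow(candidates, question, answer):
    """Keep only the candidates consistent with an answer already given."""
    return {obj: w for obj, w in candidates.items()
            if ATTRIBUTES[obj][question] == answer}


def final_guess(candidates, temperature, rng):
    """Commit to one surviving object by temperature-scaled sampling.

    The probability of each object is proportional to weight ** (1 / temperature):
    low temperatures favour the most heavily weighted survivor, high ones
    flatten the odds.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    if not candidates:
        raise ValueError("no candidate fits every answer given so far")
    objs = list(candidates)
    logits = [math.log(candidates[o]) / temperature for o in objs]
    top = max(logits)  # subtract the max for numerical stability
    weights = [math.exp(l - top) for l in logits]
    return rng.choices(objs, weights=weights, k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    pool = dict(PRIORS)  # every object starts "in superposition"
    for question, answer in [("alive", True), ("flies", False), ("has_legs", True)]:
        pool = narrow(pool, question, answer)
        print(question, answer, "->", sorted(pool))
    print("final guess:", final_guess(pool, temperature=1.0, rng=rng))
```

After three answers only “cat” and “dog” survive. Lowering the temperature makes the more heavily weighted (more clichéd) survivor, here “dog”, ever more likely; raising it flattens the odds between the two.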

As the flaws of AI Dungeon illustrate, raw LLMs and related forms of multimodal AI have a hard time keeping on the right narrative tracks, ensuring that they stick with the one character (e.g. a “helpful assistant”) rather than veering into an altogether different set of cultural expectations. Much of the fine tuning and reinforcement learning and many of the hidden prompt instructions are designed to lay better tracks - but these fixes may have unexpected cultural consequences. Trying to get an image generator to prioritize representing a wide variety of racial and cultural identities may produce Black Nazi stormtroopers. Trying to make Grok less woke may spawn MechaHitler. Contrary to much popular commentary, such outputs do not involve the LLM revealing its true self, because there is no true self to reveal.

A base model inevitably reflects the biases present in the training data[21], and having been trained on a corpus encompassing the gamut of human behaviour, good and bad, it will support simulacra with disagreeable characteristics. But it is a mistake to think of this as revealing an entity with its own agenda. The simulator is not some sort of Machiavellian entity that plays a variety of characters to further its own self-serving goals, and there is no such thing as the true authentic voice of the base model. With an LLM-based dialogue agent, it is role play all the way down.

An LLM is not a single character then, but an entire cultural corpus available for possible role play, given apparent life and animation through the alchemy of backpropagation. This account shares much common ground with versions of Gopnikism that stress the importance of stereotypes and convention as well as the notion that intelligence must be embodied. However, it is likely less concerned with pushing back against claims about agentic intelligence than with kicking away the foundational distinctions that the broader dispute relies on. In other work, Shanahan explicitly declines to take a “reductionist stance,” instead suggesting that we should be less dualistic in how we discriminate between agents and non-agents, between things that are conscious and intelligent, and things that are not. The value of simulacra is that they may oblige us over the longer term to remake the words and cultural categories that we slot different things into.

Behind this position lies a mixture of cognitive science, Wittgenstein, Derrida and Buddhist philosophy that I’m not going to even try to reconstruct. I am not a cognitive scientist, a Wittgensteinian, a Derridean or a Buddhist. All I can usefully do is briefly sketch out a cartoon version of some implications.

Shanahan is skeptical about many of our notions about human intelligence, let alone machinic intelligence, and impatient with analytic philosophers’ analogies about zombies without consciousness and the like. Instead, he wants us to abandon dualistic mind games and figure out how to talk collectively about “conscious exotica,” phenomena that we could reasonably decide to say are conscious, based on our shared experiences with them, but which may rely on mechanisms that differ profoundly from human consciousness.

Shanahan doesn’t himself think that it is enormously useful right now to talk about LLMs as having beliefs, let alone desires, except in a somewhat casual and offhand way. But we may at some point engage with LLM-like entities in the world, which seem to act in purposeful ways within it. Those entities might, like LLMs, rely internally on multitudes of simulacra in superposition, or other, even stranger architectures. As such entities engage more directly in the world, the distinction between role play and authenticity will become ever more ambiguous.

If we start to see and treat such entities as conscious beings, we may reasonably begin to describe them in this way. Building on Wittgenstein, Shanahan argues that consciousness is not an unobservable inner state, but something which we impute as members of a language community, constructing a shared (though contested) world together. The obvious, perhaps too obvious, Wittgensteinian joke is that if an LLM could speak, we would not understand it, but the practical lesson is that if an LLM seems to us, as a reflective community, to be conscious, it should be treated as such. We should not get tangled up in hypotheticals about hidden states.

In Shanahan’s argument:

If large numbers of users come to speak and think of AI systems in terms of consciousness, and if some users start lobbying for the moral standing of those systems, then a societywide conversation needs to take place.

However, he reserves his position on whether this world would be better or worse than the one we are in.

In a mixed reality future, we might find a cast of such characters – assistants, guides, friends, jesters, pets, ancestors, romantic partners – increasingly accompanying people in their everyday lives. Optimistically (and fantastically), this could be thought of as re-enchanting our spiritually denuded world by populating it with new forms of “magical” being. Pessimistically (and perhaps more realistically), the upshot could be a world in which authentic human relations are degraded beyond recognition, where users prefer the company of AI agents to that of other humans. Or perhaps the world will find a middle way and, existentially speaking, things will continue more or less as before.

This, then, lays much greater emphasis than the other three approaches on the relationship between the cultural and agentic aspects of LLMs. First, it explains how LLM-based technologies can animate entire cultural corpuses for agentic purposes, albeit with accompanying weirdnesses and limitations. Second, it suggests that our cultural categories and ways of thinking about consciousness ought to change (and likely will change), as new agentic characters become embedded into our broader cultural milieux.

I’ll likely have more detailed discussion about the points of convergence and argument between these four varying perspectives in coming months. But as noted, one of my goals in writing this was to lay out how I understand them, so that others can respond, and ideally correct any mistakes! So more, hopefully, after that happens …
